Fixing corrupt archive in Golang


Recently I had an issue sending JSON via API to our analytics tool. After a few days of troubleshooting, I found the cause over a weekend: the file being uploaded is smaller then, because there is less data on weekends, and that pointed to file size as the problem. Naturally, I decided to zip its contents, and then ran into a new problem: a corrupted archive.

The process we use to upload data to our analytics tools is the following:

1. Query data from datastore (adding some filters, calculations etc.) for the last 24 hours

2. Upload file to GCP Storage (in case of failure, we can manually upload it)

3. Download file from GCP Storage, then send it via HTTP request

A cron job doing this runs every midnight. As mentioned earlier, one of the entities had trouble with exporting, due to its size.

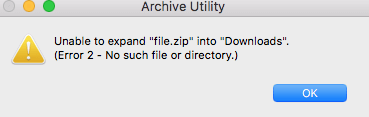

I decided to compress the file using archive/zip but ended up with corrupted archives. On Mac, trying to open the archive failed with an error.

This is how I tried to compress my file:

func GetZip(c context.Context, path string) ([]byte, error) {
	client, err := storage.NewClient(c)
	if err != nil {
		return nil, err
	}

	appID := appengine.AppID(c)
	bucketH := client.Bucket(bucketName[appID])

	// Downloading file from bucket (GCP Storage)
	reader, err := bucketH.Object(path + "/~.json").NewReader(c)
	if err != nil {
		return nil, err
	}
	defer reader.Close()

	bts, err := ioutil.ReadAll(reader)
	if err != nil {
		return nil, err
	}

	// Fixing JSON issues we had
	bts = bytes.TrimSuffix(bts, []byte(","))
	content := bytes.NewReader(bts)

	buf := new(bytes.Buffer)
	w := zip.NewWriter(buf)
	defer w.Close() // <- the line in question

	f, err := w.Create("report.json")
	if err != nil {
		return nil, err
	}

	// Wrap the content in brackets so it forms a JSON array
	_, err = f.Write([]byte("["))
	if err != nil {
		return nil, err
	}
	_, err = io.Copy(f, content)
	if err != nil {
		return nil, err
	}
	_, err = f.Write([]byte("]"))
	if err != nil {
		return nil, err
	}

	return buf.Bytes(), nil
}

Notice the defer w.Close() line. Searching for a solution brought me to the tests for archive/zip on golang.org, which close the zip writer explicitly at the end instead of deferring the close. By making this small change to my code (err = w.Close() instead of defer w.Close()), I fixed the issue. In the end, this is what I got (minus the JSON alteration part):

func GetZip(c context.Context, path string) ([]byte, error) {
	client, err := storage.NewClient(c)
	if err != nil {
		return nil, err
	}

	appID := appengine.AppID(c)
	bucketH := client.Bucket(bucketName[appID])

	reader, err := bucketH.Object(path + "/~.json").NewReader(c)
	if err != nil {
		return nil, err
	}
	defer reader.Close()

	buf := new(bytes.Buffer)
	w := zip.NewWriter(buf)

	f, err := w.Create("report.json")
	if err != nil {
		return nil, err
	}

	_, err = io.Copy(f, reader)
	if err != nil {
		return nil, err
	}

	err = w.Close()
	if err != nil {
		return nil, err
	}

	return buf.Bytes(), nil
}

This works because explicitly closing the zip writer "finishes writing the zip file by writing the central directory". Deferred calls, on the other hand, run only after the return statement has evaluated its result values, so the call to __buf.Bytes()__ happens before the call to __Close()__, and the returned bytes are missing the "central directory."
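To see the difference in isolation, here is a minimal sketch that uses only the standard library and no GCP Storage. The helper names (zipDeferred, zipExplicit, isValidZip) and the sample payload are made up for this illustration and are not part of the original code. Each archive is checked by reading it back with zip.NewReader, which fails when the central directory is missing.

```go
package main

import (
	"archive/zip"
	"bytes"
	"fmt"
)

// zipDeferred (hypothetical helper) reproduces the bug: buf.Bytes() is
// evaluated as the return value before the deferred w.Close() runs,
// so the returned bytes lack the central directory.
func zipDeferred(name string, data []byte) ([]byte, error) {
	buf := new(bytes.Buffer)
	w := zip.NewWriter(buf)
	defer w.Close()

	f, err := w.Create(name)
	if err != nil {
		return nil, err
	}
	if _, err := f.Write(data); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

// zipExplicit (hypothetical helper) closes the writer before reading
// the buffer, so the archive is complete when buf.Bytes() is called.
func zipExplicit(name string, data []byte) ([]byte, error) {
	buf := new(bytes.Buffer)
	w := zip.NewWriter(buf)

	f, err := w.Create(name)
	if err != nil {
		return nil, err
	}
	if _, err := f.Write(data); err != nil {
		return nil, err
	}
	if err := w.Close(); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

// isValidZip reports whether b can be opened as a zip archive.
func isValidZip(b []byte) bool {
	_, err := zip.NewReader(bytes.NewReader(b), int64(len(b)))
	return err == nil
}

func main() {
	data := []byte(`[{"id":1}]`) // sample payload, made up for the demo

	bad, _ := zipDeferred("report.json", data)
	good, _ := zipExplicit("report.json", data)

	fmt.Println("deferred close valid:", isValidZip(bad))
	fmt.Println("explicit close valid:", isValidZip(good))
}
```

A read-back check like isValidZip is also cheap to run in a unit test before uploading, which would have caught the corrupted archive without having to open it by hand.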